Telepathy from microphony

New interfaces are becoming possible, a shift not unlike the move from imperative to declarative programming. Most chores you currently do on your phone, you could do more easily and better with a natural language interface. It's still impossible to say exactly what these interfaces will look like (does the Siri team get it together[4] and claim the market dominance they could have in 3 months if they tried? Do apps add natural language interfaces one by one?). More importantly, it's hard to say how they will be rolled out. But they will certainly involve language, because you're hardwired for language. You have dedicated hardware for the last thing you heard and the last thing you said; you evolved for all language, especially speech. In this post, we reconsider ways of getting language into a mobile computer (that is, a phone). Right now, for most people, the best option is typing on a mobile keyboard at 36 words per minute, about 1/4 the rate of normal speech. Given that the actually hard part of natural language interfaces is solved, this unstable equilibrium will soon collapse.

Voice

Why not just pick your favorite VC-funded[1] voice recognition startup and speak into your phone? For one, none of them even ship phone apps. They know you weren't going to use it, because:

- Everyone can hear you! We call this conspicuity.

- Voice recognition still fails sometimes.

- Because everyone can hear you, failures aren't just frustrating but humiliating.

Wristbands

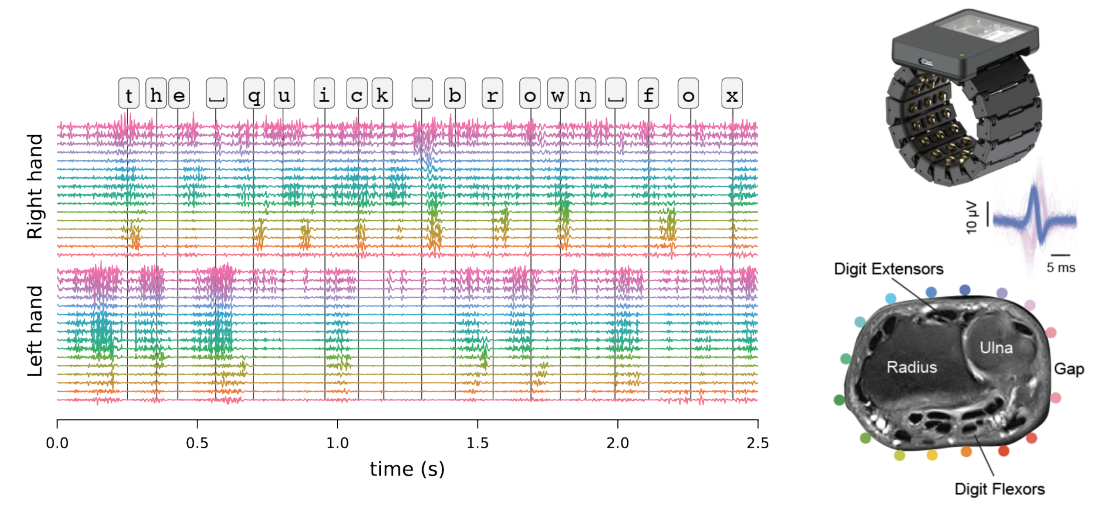

Meta is betting on EMG keyboard/handwriting recognition: a wristband that can pick up scribbling or typing [3].

It looks promising thus far! Personally, I'm a little skeptical of handwriting recognition as a replacement for phone keyboards, because I don't think the former is that much more convenient.

You'd replace one unnatural action with another. At the same time, there's a meaningful likelihood some form of the Meta wristband will catch on.

Gaze tracking

Gaze tracking is most famously used in Apple Vision Pro, where you navigate by looking at stuff. I found the device's insistence that my gaze keep still infuriating and inherently oppressive. Why would you yield your freedom to move your eyes as you please, with the natural flow of your attention? A fine addition to Apple's suite of software-defined bondage devices.

BCI

Speech recognition brain implants have impressive results (23.8% word error rate, or WER, on a 125k-word dictionary in a patient who can no longer speak unaided), but they will not become widely adopted this century. That's not because of the risk of deadly infection (which is high) or the cost (brain surgery does not scale) but because of longevity: even the best brain implants just don't last very long. Suppose a BCI leap solves all of these. Is the brain the right place to find intent? <epistemic status: vibes> Experiments seem to show there are at least two of you in there. Controlling your thoughts is actually an entire discipline that takes years of training to reach moderate proficiency. Deriving intent from brain activity seems to require a reconceptualization of what you are. For the purposes of my endeavors (as well as my general conception-of-self), I prefer to think of "intent" as anything that makes it down the spinal cord, and to think of the many-faced void soup above as little as possible.</es>

Voice, but different?

Is there an inconspicuous interface that still has all the benefits of speech? In general, we call this Subvocal Recognition. It's done with neck microphones, and SOF dudes currently use them for whisper comms. Firefighters, motorcycle riders, and others who work in loud environments or with face-obstructing PPE use them as well. Can you use a neck microphone as a consumer device? Not really, because they are not very good. $300 industry-leading systems still lecture users about placement in their training material instead of using a beam-formed microphone array. I tested a couple, and have yet to find a neck mic that performs better than the affordable and convenient DJI Mic 2. As it stands, neck microphones seem to be a tech-underserved area.
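For readers who haven't met beamforming before, here is a minimal delay-and-sum sketch in plain Python. It is a toy under loud assumptions, not a description of any shipping product: the per-microphone delays are known whole numbers of samples, the noise is independent Gaussian noise on each channel, and every name and parameter (`delay_and_sum`, `simulate`, the 3-sample spacing) is made up for illustration.

```python
import math
import random


def delay_and_sum(channels, delays):
    """Align each channel by its known integer sample delay, then average.

    channels: list of equal-length sample lists, one per microphone.
    delays:   delays[m] is how many samples later the source reaches mic m.
    """
    # Only keep the samples that exist on every channel after alignment.
    n = len(channels[0]) - max(delays)
    return [
        sum(ch[t + d] for ch, d in zip(channels, delays)) / len(channels)
        for t in range(n)
    ]


def simulate(num_mics=4, num_samples=2000, noise_std=0.5, seed=0):
    """Toy scene: one sine source, delayed copies plus independent noise."""
    rng = random.Random(seed)
    source = [math.sin(2 * math.pi * 0.01 * t) for t in range(num_samples)]
    delays = [3 * m for m in range(num_mics)]  # hypothetical array geometry
    channels = [
        [
            (source[t - d] if t >= d else 0.0) + rng.gauss(0.0, noise_std)
            for t in range(num_samples)
        ]
        for d in delays
    ]
    return source, delays, channels


def mse(a, b):
    """Mean squared error over the overlapping prefix of two sequences."""
    n = min(len(a), len(b))
    return sum((a[i] - b[i]) ** 2 for i in range(n)) / n


source, delays, channels = simulate()
beamformed = delay_and_sum(channels, delays)
print("single mic MSE:", mse(channels[0], source))
print("beamformed MSE:", mse(beamformed, source))
```

Because the aligned speech adds up coherently while the independent noise partially cancels, the averaged output tracks the source more closely than any single channel. A real array would have to estimate the delays rather than be handed them, which is where the placement problem moves from the user into the firmware.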

Can we build an even better subvocal microphone? It seems clear to everyone that this kind of tech is coming, and I think that future is already within reach. Facial sEMG-based silent speech recognition systems can hit 9% Word Error Rate [2] on a 2200-word dictionary, and MIT Media Lab's Arnav Kapur gave a 2020 TED talk demoing one such system. In the extreme case, these require no visible motion (subthreshold MUAPs) to recognize speech. Relax the "not visible at all" constraint into "not too intrusive", and you reach a technically feasible product.
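Since Word Error Rate carries a lot of weight in these comparisons, here is a minimal sketch of how it is usually computed: the word-level edit distance (substitutions, deletions, insertions) between the reference transcript and the recognizer's output, divided by the number of reference words. The function name and the lowercase/whitespace tokenization are my own simplifications; published results may normalize text differently.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # turning ref[:i] into an empty hypothesis costs i deletions
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(
                min(
                    prev[j] + 1,         # deletion (reference word missed)
                    curr[j - 1] + 1,     # insertion (extra hypothesis word)
                    prev[j - 1] + cost,  # substitution or exact match
                )
            )
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("turn the lights off", "turn the light off"))
```

Note that nothing in the formula knows how large the vocabulary was or how carefully the subject spoke, which is why a WER figure only means something alongside the dictionary size and recording conditions. Insertions also mean the value can exceed 1.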

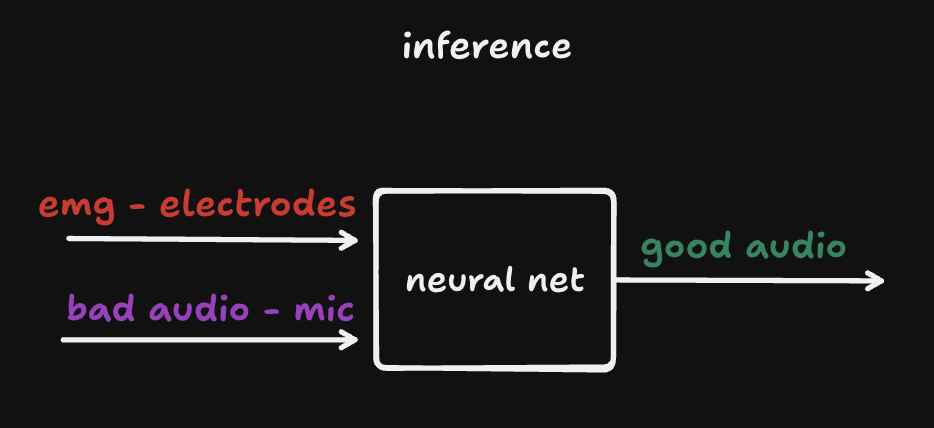

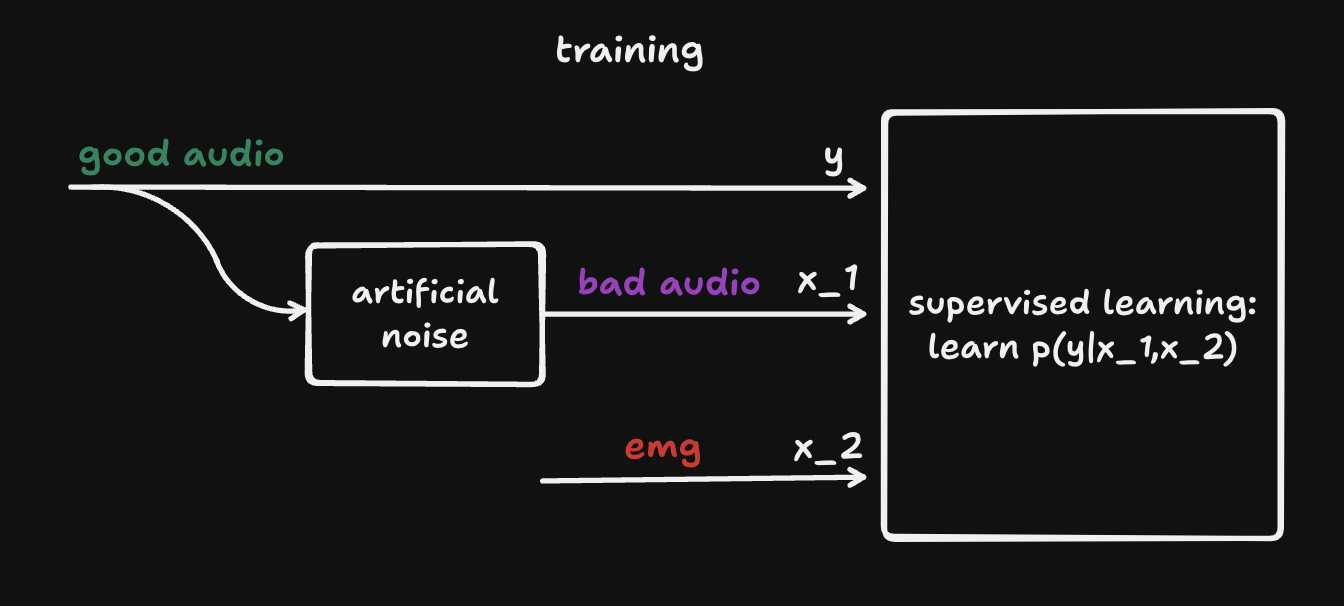

Oh, there's one more trick. Researchers obviously can't use a microphone, because that would be cheating. But we're not researchers, so our subvocal microphone can actually include a normal microphone! Reformulate the problem from p(word | emg) into p(audio | emg + audio), and you get something like:

- Build a good-looking, beam-formed neck microphone. Include EMG hardware.

- Sell to audio enthusiasts, maybe military

- Train the best sEMG-to-Voice model yet made

- Release free update that makes the microphone 10x better

- Consumer-grade telepathy
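Why should conditioning on both EMG and audio beat either one alone? A toy illustration, and only that: treat the microphone and the EMG channel as two independent noisy measurements of the same underlying value and combine them with inverse-variance weighting. This is a standard textbook estimator that I'm swapping in for intuition, not the model the plan above would actually train, and the noise variances and function names are invented.

```python
import random


def fuse(est_a, var_a, est_b, var_b):
    """Inverse-variance weighted average of two independent noisy estimates."""
    weight_a = (1.0 / var_a) / (1.0 / var_a + 1.0 / var_b)
    return weight_a * est_a + (1.0 - weight_a) * est_b


def compare(num_samples=5000, var_mic=1.0, var_emg=4.0, seed=0):
    """Return mean squared error of (mic alone, EMG alone, fused estimate)."""
    rng = random.Random(seed)
    err_mic = err_emg = err_fused = 0.0
    for _ in range(num_samples):
        truth = rng.uniform(-1.0, 1.0)
        # Hypothetical noise levels: the EMG channel is assumed to be noisier.
        mic = truth + rng.gauss(0.0, var_mic ** 0.5)
        emg = truth + rng.gauss(0.0, var_emg ** 0.5)
        fused = fuse(mic, var_mic, emg, var_emg)
        err_mic += (mic - truth) ** 2
        err_emg += (emg - truth) ** 2
        err_fused += (fused - truth) ** 2
    return (
        err_mic / num_samples,
        err_emg / num_samples,
        err_fused / num_samples,
    )


mic_mse, emg_mse, fused_mse = compare()
print("mic:", mic_mse, "emg:", emg_mse, "fused:", fused_mse)
```

Even when one channel is much noisier than the other, the fused estimate has lower expected error than the better channel alone, as long as the two noise sources are independent. That is the intuition behind shipping the EMG hardware next to an ordinary microphone rather than instead of one.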

A recent windfall gifted me post-employment, so I've been making progress on the plan full time. If this interests you, get in touch! I can't pay you a salary yet, but I've hired talented friends as contractors before, and I'd love to meet someone equally obsessed with this problem!

Footnotes

1. My personal favorite is not even VC funded! Makes you think.

2. Can you game the Word Error Rate metric? YES! Most commonly it's gamed using a small dictionary, hence I cite dictionary sizes alongside WERs. But this is far from perfect! You can still have the subject speak too slowly, or enunciate too clearly. It's a compromise I'm unhappy with until I find a better metric. Also, if you're gonna read up on this, be on the lookout for "Session Dependent" experiments - it means they did not remove and reattach the electrodes between train and test sessions.

3. Certainly not their only bet: Orion has a formidable microphone array.

4. A friend close to the matter tells me this is unlikely.

References

- https://stratechery.com/2024/the-gen-ai-bridge-to-the-future/

- https://scale.com/blog/text-universal-interface

- https://en.wikipedia.org/wiki/Desktop_metaphor

- https://dl.acm.org/doi/10.1145/3172944.3172977

- https://www.researchgate.net/publication/336206445_How_do_People_Type_on_Mobile_Devices_Observations_from_a_Study_with_37000_Volunteers

- https://github.com/OpenInterpreter/open-interpreter

- https://betterdictation.com/

- https://wisprflow.ai/

- https://talonvoice.com/

- https://en.wikipedia.org/wiki/Privacy#Privacy_paradox_and_economic_valuation

- https://en.wikipedia.org/wiki/Value-action_gap

- https://www.nature.com/articles/s41586-023-06377-x

- https://pubmed.ncbi.nlm.nih.gov/34847547/